Salesforce has a flurry of new extensions coming up thanks to the launch of Salesforce DX in 2017. Its core services include Sales Cloud, Marketing Cloud, Chatter, Desk.com, and Work.com. It serves over 130,000 customers worldwide, and millions of users rely on Salesforce for its end-user features. Salesforce's API, web, and mobile channels together handle over 1 billion transactions per day.

Where is all the data coming from?

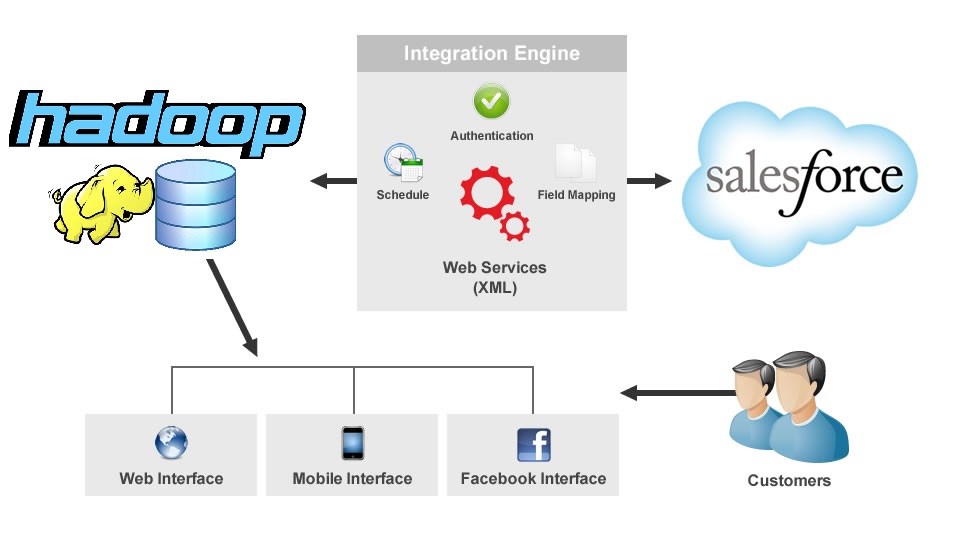

Event data comes from API usage and logins. Each login is an event, and all of its associated information becomes part of Salesforce's big data. Hadoop collects data such as user IDs, organization IDs, the APIs and URIs called, and mobile device details. IP addresses and response times also contribute to the event data. Most Salesforce users consult these logs on a regular basis.
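To make the shape of one event concrete, here is a minimal sketch that parses a single log line into a typed record holding the fields listed above. The tab-separated layout, the field order, and the names `Event`, `FIELDS`, and `parse_event` are all hypothetical; the post does not describe Salesforce's actual log format.

```python
from dataclasses import dataclass

# Hypothetical field order for one tab-separated log line; the post does not
# describe Salesforce's actual log format.
FIELDS = ("user_id", "org_id", "api", "uri", "ip", "response_ms")


@dataclass(frozen=True)
class Event:
    user_id: str
    org_id: str
    api: str
    uri: str
    ip: str
    response_ms: int


def parse_event(line: str) -> Event:
    """Split one tab-separated log line into a typed event record."""
    parts = line.rstrip("\n").split("\t")
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    values = dict(zip(FIELDS, parts))
    values["response_ms"] = int(values["response_ms"])  # response time in milliseconds
    return Event(**values)


if __name__ == "__main__":
    line = "005xx0000001\t00Dxx0000001\tREST\t/services/data/v40.0/query\t10.0.0.1\t42"
    event = parse_event(line)
    print(event.org_id, event.response_ms)  # 00Dxx0000001 42
```

Typed records like this are what a Hadoop job would group and aggregate, for example by `org_id` for capacity planning.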

Hadoop is arguably the most popular open-source MapReduce technology of 2017. It can answer simple as well as highly complex questions about large data sets. Internal use cases at Salesforce include capacity planning, product metrics, and product features such as Chatter file recommendations, search relevancy, and user recommendations.

What Salesforce is doing with Hadoop right now

At Salesforce, collaborative filtering, a relatively simple algorithm, powers file suggestions. It takes inspiration from modern algorithms such as Amazon's item-to-item collaborative filtering, applied here to recommend files to Chatter users. This approach reduces the cold-start problem for new users, since a user who has interacted with even one file can already receive suggestions. The algorithm runs on a Hadoop cluster as a Java MapReduce job, which lets it scale to large volumes of interaction data. Similar collaborative filtering algorithms drive recommendation systems at sites such as Twitter, Facebook, and Amazon.
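The item-to-item idea can be sketched in a few lines of plain Python. This is an illustration of the general technique, not Salesforce's implementation: it computes cosine similarity between files (each file treated as a binary vector of the users who engaged with it) and scores unseen files by their similarity to files a user already has. The function names and sample data are hypothetical, and the loops stand in for what the map and reduce phases of a Java MapReduce job would do at scale.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt


def item_similarities(interactions):
    """Cosine similarity between files, treating each file as a binary vector of users.

    interactions maps user id -> set of file ids that user has engaged with.
    """
    item_counts = defaultdict(int)  # users per file
    co_counts = defaultdict(int)    # users who engaged with both files of a pair
    # In a MapReduce job, this loop is the map side: each user emits file pairs,
    # and the reducers sum the counts per pair.
    for files in interactions.values():
        for f in files:
            item_counts[f] += 1
        for a, b in combinations(sorted(files), 2):
            co_counts[(a, b)] += 1
    sims = {}
    for (a, b), co in co_counts.items():
        s = co / sqrt(item_counts[a] * item_counts[b])
        sims[(a, b)] = s
        sims[(b, a)] = s  # similarity is symmetric
    return sims


def recommend(user, interactions, sims, k=3):
    """Score unseen files by summing their similarity to files the user has seen."""
    seen = interactions.get(user, set())
    scores = defaultdict(float)
    for (a, b), s in sims.items():
        if a in seen and b not in seen:
            scores[b] += s
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [f for f, _ in ranked[:k]]


interactions = {
    "alice": {"q3-plan.pdf", "roadmap.pptx"},
    "bob": {"q3-plan.pdf", "roadmap.pptx", "pricing.xlsx"},
    "carol": {"roadmap.pptx", "pricing.xlsx"},
    "dave": {"q3-plan.pdf"},  # a newer user with a single interaction
}
sims = item_similarities(interactions)
print(recommend("dave", interactions, sims))  # ['roadmap.pptx', 'pricing.xlsx']
```

Note how `dave`, with only one file, still gets ranked suggestions: the similarities are computed between items, so a single interaction is enough to look them up.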

Hadoop is the technology of choice for many developers processing big data. Combining Hadoop, Java MapReduce, Pig, and Force.com gives users a flexible, customizable toolset for managing large data sets. With the right combination of software and tools, measuring all kinds of product metrics becomes much easier. You can extract any data from reports on standard and custom objects in Salesforce, then use your favorite analytical tool to draw conclusions from the results.
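As a rough illustration of how a product metric flows through MapReduce, the sketch below simulates the map, shuffle, and reduce phases in plain Python to compute call counts and mean response times per feature. The feature names and event tuples are hypothetical sample data, and a real job would run the mapper and reducer as Hadoop tasks rather than in-process functions.

```python
from collections import defaultdict


def map_phase(events):
    """Mapper: emit (feature, (1, response_ms)) for each (feature, response_ms) event."""
    for feature, response_ms in events:
        yield feature, (1, response_ms)


def shuffle(pairs):
    """Group mapper output by key, which Hadoop does between the map and reduce phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: total call count and mean response time (ms) per feature."""
    metrics = {}
    for feature, values in groups.items():
        calls = sum(count for count, _ in values)
        total_ms = sum(ms for _, ms in values)
        metrics[feature] = (calls, total_ms / calls)
    return metrics


events = [
    ("chatter_files", 120),
    ("search", 80),
    ("chatter_files", 100),
    ("search", 40),
    ("reports", 300),
]
metrics = reduce_phase(shuffle(map_phase(events)))
print(metrics["chatter_files"])  # (2, 110.0)
```

In Pig, the same aggregation would be a `GROUP` by feature followed by `COUNT` and `AVG` inside a `FOREACH ... GENERATE`, which is why Pig is a convenient layer over hand-written MapReduce for this kind of metric.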

The data transfer tools

Several popular tools help transfer data from Salesforce to Hadoop, and almost all of them can handle large volumes of data. One such tool, salesforce2hadoop, has a few defining characteristics:

- Uses the Scala programming language, which runs on the JVM and makes interaction with Hadoop much easier for the data manager.

- Uses the Kite SDK library, a robust API for managing datasets in Hadoop, as the foundation of salesforce2hadoop.

- Uses Apache Avro as the storage format when writing to HDFS, which makes schema evolution much easier.
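Why Avro eases schema evolution can be shown with a simplified version of its record resolution rules: when a reader schema adds a field, old data fills it from the field's default, and fields that exist only in the writer schema are ignored. The sketch below reimplements just those two rules in plain Python for illustration; it is not the Apache Avro library, and the `Account` schemas and `resolve` function are hypothetical examples.

```python
# A simplified illustration of Avro's record schema-resolution rules,
# written in plain Python; it is not the Apache Avro library.
WRITER_SCHEMA = {  # schema the old data was written with
    "type": "record",
    "name": "Account",
    "fields": [
        {"name": "Id", "type": "string"},
        {"name": "Name", "type": "string"},
    ],
}

READER_SCHEMA = {  # newer schema that adds a nullable field with a default
    "type": "record",
    "name": "Account",
    "fields": [
        {"name": "Id", "type": "string"},
        {"name": "Name", "type": "string"},
        {"name": "Industry", "type": ["null", "string"], "default": None},
    ],
}


def resolve(record, writer, reader):
    """Read a record written with `writer` as if it had the `reader` schema."""
    writer_fields = {f["name"] for f in writer["fields"]}
    out = {}
    for field in reader["fields"]:
        name = field["name"]
        if name in writer_fields:
            out[name] = record[name]          # field exists in both schemas
        elif "default" in field:
            out[name] = field["default"]      # new field: fall back to its default
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    # Fields present only in the writer schema are simply dropped.
    return out


old_record = {"Id": "001xx0000001", "Name": "Acme"}
print(resolve(old_record, WRITER_SCHEMA, READER_SCHEMA))
# {'Id': '001xx0000001', 'Name': 'Acme', 'Industry': None}
```

In practice this means a new custom field in Salesforce can be added to the schema without rewriting the Avro files already sitting in HDFS.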

The transfer process also relies on the Force.com Web Service Connector (WSC), so the extracted data reflects the objects and fields defined in your organization's Enterprise WSDL.
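One way to picture how Enterprise WSDL metadata shapes what lands in Hadoop is to turn a list of object fields and their XSD types into an Avro record schema. The sketch below does that under loudly stated assumptions: the `XSD_TO_AVRO` table and the `avro_schema_for` function are hypothetical, and salesforce2hadoop or other tools may map types (especially dates and IDs) differently.

```python
import json

# Hypothetical mapping from XSD field types found in an Enterprise WSDL to Avro
# primitive types; real tools may map dates and IDs differently.
XSD_TO_AVRO = {
    "xsd:string": "string",
    "xsd:boolean": "boolean",
    "xsd:int": "int",
    "xsd:double": "double",
    "xsd:date": "string",      # kept as ISO-8601 text in this sketch
    "xsd:dateTime": "string",  # kept as ISO-8601 text in this sketch
}


def avro_schema_for(sobject, fields):
    """Build an Avro record schema from (field name, XSD type) pairs.

    Every field becomes a ["null", T] union with a null default, because
    Salesforce fields can be empty. Unknown XSD types fall back to string.
    """
    return {
        "type": "record",
        "name": sobject,
        "fields": [
            {"name": name, "type": ["null", XSD_TO_AVRO.get(xsd, "string")], "default": None}
            for name, xsd in fields
        ],
    }


schema = avro_schema_for(
    "Account",
    [("Name", "xsd:string"), ("AnnualRevenue", "xsd:double"), ("IsDeleted", "xsd:boolean")],
)
print(json.dumps(schema, indent=2))  # None is serialized as JSON null
```

Putting `"null"` first in each union matters in Avro, because a union field's default must match the first branch of the union.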

Author Bio:

David Wicks is a developer and product leader. He works with Hadoop and drives the complete integration of multi-faceted platforms like Salesforce with the help of Flosum.com.
